DISCLAIMER: The comparisons shown in the following figures do not illustrate the general performance of the different compilers, but the performance of a particular version of each compiler on these particular test cases, implemented with the knowledge of an MSc student. Performance on real applications, or in codes implemented by experts in the area, could change dramatically across implementations. We discourage users from using the information published here for other purposes. In addition, the accULL compiler is a research implementation which should not be used for professional purposes. The views and opinions expressed in this article are those of the author and do not necessarily reflect the official policy or position of the accULL project, the GCAP group or the University of La Laguna.

During June and July 2012, one of our master's students (Iván López) visited the EPCC at Edinburgh.

In this incredible Scottish city, you can find one of the most relevant centres of High Performance Computing.

During my stay, we carried out a study of the status, at the time, of different OpenACC implementations, using the resources that EPCC and HPCEuropa2 provided to us.

We chose three codes from the Rodinia benchmark suite: HotSpot, Path Finder and a non-blocked LU decomposition. We also added a blocked matrix multiplication implementation for exploratory purposes. We used an Nvidia C2050 GPU with 3 GB of memory, connected to a quad-core Intel i7 (2.8 GHz). The CUDA version available at the time was 4.1.

As usual in our comparisons, we try to illustrate the “time-to-solution”. We therefore include memory transfer times but not device initialisation, since that cost can be hidden by using an external program to open the device (as the PGI compiler does).
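As a rough illustration of this kind of measurement, the sketch below times an OpenACC region together with its host/device copies while keeping device start-up outside the timer. It is not the harness used for the study: `wall_seconds`, `scale` and `time_to_solution` are hypothetical names, and calling `acc_init` before starting the timer is just one assumed way of paying the initialisation cost up front, different from the external program mentioned above. Without an OpenACC compiler the pragmas are ignored and the code runs on the host.

```c
#include <assert.h>
#include <stddef.h>
#include <time.h>
#ifdef _OPENACC
#include <openacc.h>
#endif

/* Wall-clock time in seconds, using C11 timespec_get. */
static double wall_seconds(void) {
    struct timespec ts;
    timespec_get(&ts, TIME_UTC);
    return (double)ts.tv_sec + (double)ts.tv_nsec * 1e-9;
}

/* Hypothetical kernel: scales a vector in place. The copy clause makes the
   host-to-device and device-to-host transfers part of the region. */
static void scale(float *x, size_t n, float a) {
    #pragma acc parallel loop copy(x[0:n])
    for (size_t i = 0; i < n; ++i)
        x[i] *= a;
}

/* Returns the seconds spent on transfers plus kernel execution.
   Device initialisation is triggered before the timer starts,
   so it is not counted (assumption: this mirrors the intent of
   excluding start-up cost from the time-to-solution). */
static double time_to_solution(float *x, size_t n, float a) {
    assert(x != NULL && n > 0);
#ifdef _OPENACC
    acc_init(acc_device_not_host);  /* pay the start-up cost here */
#endif
    double start = wall_seconds();
    scale(x, n, a);
    return wall_seconds() - start;
}
```

Calling `time_to_solution(x, n, 2.0f)` on a host array `x` of `n` floats doubles every element and returns the elapsed time in seconds.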

The PGI results were obtained with version 12.5 of the PGI Compiler tool-kit, which features initial OpenACC support. The version of the CAPS HMPP compiler with OpenACC support was 3.2.0, the latest available at that time.

The results are shown in the figure as a percentage of the performance of the native CUDA implementation. For the HotSpot (HS) test case, the generated code reaches almost 70% of the native CUDA performance. However, the blocked matrix multiplication reaches barely 5% of the native performance. It is worth noting that the native implementation chosen for the MxM is the DGEMM routine from the CUBLAS library, which is highly optimised.
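The exact formula behind these percentages is not stated here. A natural reading, assuming performance is taken as the inverse of the time-to-solution $t$, would be:

$$
P_{\text{rel}} = 100 \times \frac{t_{\text{CUDA}}}{t_{\text{OpenACC}}}
$$

Under that assumption, 70% means the generated code takes about $1/0.7 \approx 1.43$ times as long as the native CUDA version, and 5% means it takes $1/0.05 = 20$ times as long.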

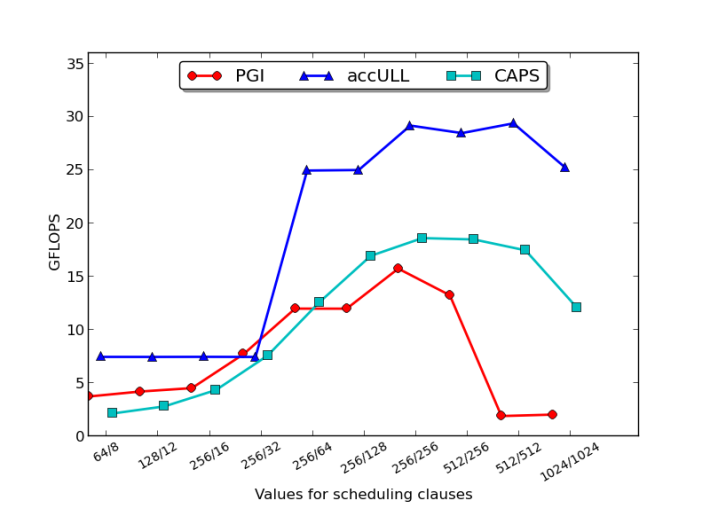

One of the most important aspects affecting performance is the choice of thread and kernel block configuration. OpenACC provides the gang, worker and vector clauses to let users manually tune the shape of the kernel.
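To make these clauses concrete, here is a minimal sketch of a loop nest whose shape is set by hand. The kernel `scale_grid` and its parameters `gangs`, `workers` and `vlen` are hypothetical and are not taken from the benchmarks above. On CUDA targets, compilers typically map gangs to thread blocks and vector lanes to threads, but the exact mapping is implementation-defined, which is one reason the same values can behave differently from one compiler to another.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical kernel: out = a * in over an nx*ny*nz grid stored flat.
   gangs, workers and vlen are the tuning knobs for the kernel shape. */
static void scale_grid(const float *in, float *out, int nx, int ny, int nz,
                       float a, int gangs, int workers, int vlen) {
    int n = nx * ny * nz;
    assert(n > 0 && gangs > 0 && workers > 0 && vlen > 0);

    /* Sizes are requested on the parallel construct ... */
    #pragma acc parallel num_gangs(gangs) num_workers(workers) \
                         vector_length(vlen) copyin(in[0:n]) copyout(out[0:n])
    {
        /* ... and each loop level is assigned to one level of parallelism. */
        #pragma acc loop gang
        for (int i = 0; i < nx; ++i) {
            #pragma acc loop worker
            for (int j = 0; j < ny; ++j) {
                #pragma acc loop vector
                for (int k = 0; k < nz; ++k) {
                    int idx = (i * ny + j) * nz + k;
                    out[idx] = a * in[idx];
                }
            }
        }
    }
}
```

A tuning experiment would call `scale_grid` repeatedly with different `gangs`, `workers` and `vlen` values and compare the measured times; the result must be the same for every configuration, only the speed should change.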

The following graph illustrates the effect that varying the number of gangs, workers and vector lanes has on the overall performance, and how this effect varies from one compiler implementation to another.

It is important to use an appropriate combination of the scheduling clauses in order to obtain the maximum performance from each implementation, particularly with the CAPS compiler.

And finally, despite the cold of Scotland and its strange animals, we can say that the time spent at the EPCC was really worth it.